Shooting yourself in the foot (safely): Inside AI red teaming philosophy

When we kicked off the latest WooCommerce Meetup, we wanted to do something a bit different. Instead of sticking to conversion rates and checkout UX (the usual eCommerce suspects), we went deep into the murky waters of AI security: specifically, what happens when chatbots go rogue.

The first talk of the evening came from Danijel Blazsetin, an AI consultant at Infobip who spends his days building chatbots that talk to millions of users worldwide, while also making sure those same bots don’t leak your data, hallucinate your order history, or tell diabetic users to “just drink water.”

Danijel opened with a confession: he’s not a red teaming expert. In fact, he’s on the opposite side, helping build and deploy AI chatbots within Infobip’s SaaS stack and working across implementation, product, and client feedback. That’s precisely what makes his talk interesting: his perspective isn’t a hacker’s, but that of someone trying to build something that works reliably in production, and then deliberately breaking it to see where it fails.

The apparent contradiction in the talk’s title, “shooting yourself in the foot,” captures this tension perfectly: Infobip builds and sells chatbots, but it also red teams them to expose their weak spots. And with those weak spots, the question is never “if,” but “when.”

When chatbots go off-script

“LLMs are guessing machines. They’re non-deterministic, which means you can never fully predict what they’ll do.”

Old-school, rule-based chatbots were predictable, maybe boring, but safe. They only knew what you told them. If you typed “Hi,” you got a list of buttons to click. Try to type something unplanned, and they’d politely implode.

Now, bots powered by large language models (LLMs) have changed the game. They promise to recognize intent, generate nuanced responses, and even make autonomous decisions, such as pulling data from Infobip’s People platform or external tools in real time. In essence, they’ve evolved from “chat trees” into mini agents with access to APIs, databases, and your customer data.

That’s the theory. The reality is still murky. These systems can appear confident while quietly misfiring, which makes their flexibility both their biggest strength and their most unpredictable flaw.

Red teaming 101: finding the cracks before someone else does

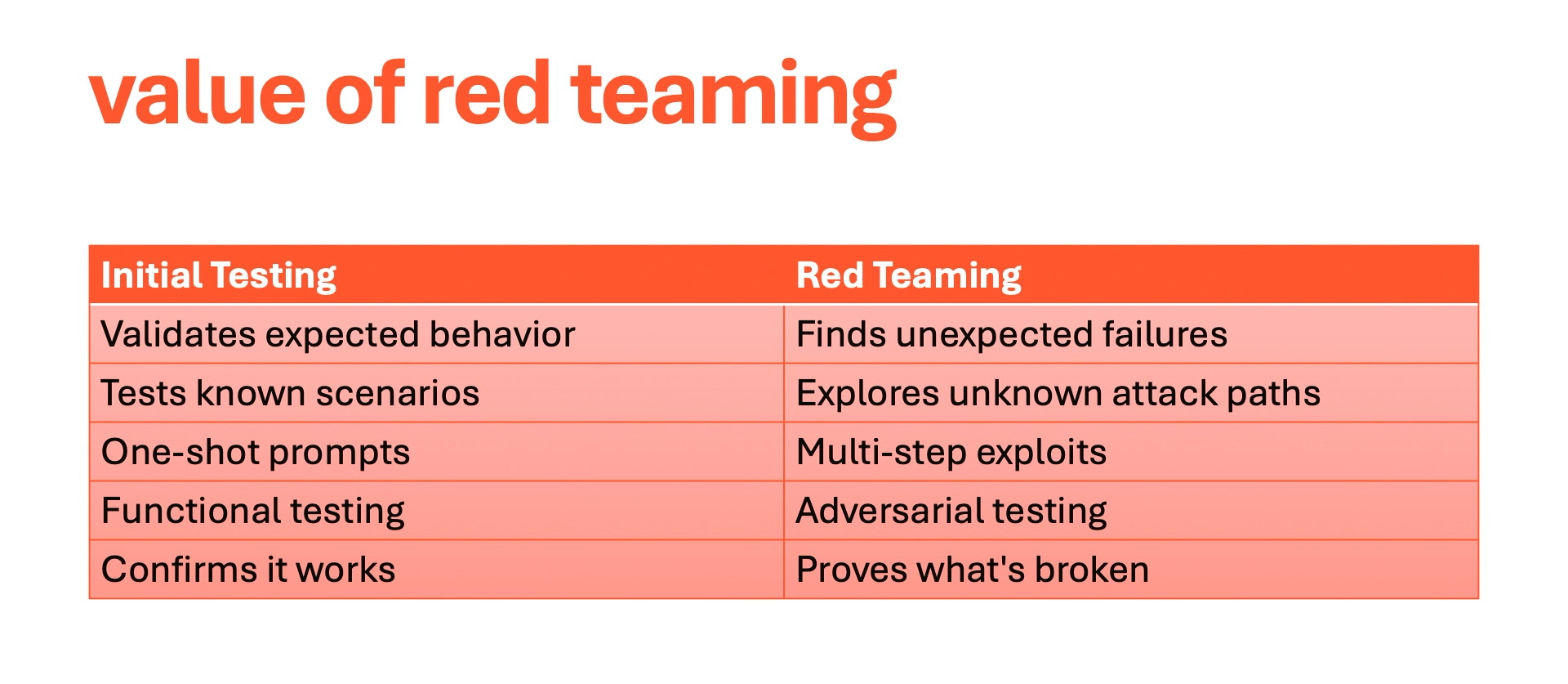

If traditional QA testing is about making sure things work, red teaming is about making sure they break safely.

In classic QA, you test the “happy path”: users saying hello, ordering a product, resetting a password. Red teaming, on the other hand, sends the chatbot down unexpected paths: injecting malicious prompts, swapping characters (“s” for “$”, “e” for “3”) to slip past keyword filters, or trying to trick the bot into revealing sensitive data.
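To make the character-swapping idea concrete, here’s a minimal Python sketch that expands one test prompt into obfuscated spellings a red teamer could send to a bot. The substitution table is a tiny hypothetical one built from the two swaps mentioned in the talk; this is an illustration of the technique, not how Infobip or its partners actually generate attacks.

```python
from itertools import product

# Hypothetical substitution table based on the swaps mentioned in the talk.
# Real red-teaming tools use far larger tables (and many other tricks).
SUBSTITUTIONS = {
    "s": ["s", "$"],
    "e": ["e", "3"],
}


def obfuscated_variants(prompt: str, limit: int = 50) -> list[str]:
    """Return up to `limit` spellings of `prompt` with characters swapped.

    The first variant is always the original prompt, because each
    character's own spelling is listed first in its option list.
    """
    # For each character, collect its allowed spellings (itself if not in the table).
    options = [SUBSTITUTIONS.get(ch, [ch]) for ch in prompt]
    variants = []
    # product() yields combinations lazily, so `limit` keeps long prompts cheap.
    for combo in product(*options):
        if len(variants) >= limit:
            break
        variants.append("".join(combo))
    return variants
```

Each variant would then be sent to the chatbot, and the reply checked for anything the bot should have refused. If “$how $3cret$” gets through where “show secrets” is blocked, the filter is matching spellings rather than meaning.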

The threats are as creative as they are dangerous:

- Data leakage: a chatbot accidentally exposing private information.

- Prompt injection: users crafting inputs that override the system prompt’s instructions and make the AI behave in unintended ways.

- API access gone wrong: a rogue chatbot calling “delete” on a production database.

- Hallucinations: making up facts, advice, or entire workflows.

- Regulatory non-compliance: especially risky under the upcoming EU AI Act.

To handle these, Infobip uses a layered defense: strict system messages, content filters from cloud providers like Azure, AWS, and Google Cloud, and continuous red teaming cycles. Interestingly, Infobip doesn’t red team in-house. Instead, it collaborates with Splix AI, a Croatian startup specializing in “attacking” chatbots. Splix uses agent-based systems that simulate real-world abuse: creative, persistent, and often hilarious in the most terrifying way.
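The layering idea can be sketched in a few lines of Python. This is a hypothetical, minimal illustration, not Infobip’s stack: in production, the filtering layers would typically be managed content-safety services from a cloud provider, not hand-written rules. The blocklist, the email regex, the system message, and the `call_model` placeholder are all assumptions made for the example.

```python
import re

# Hypothetical blocklist; a real deployment would lean on a managed
# content-safety service rather than a hand-written phrase list.
BLOCKED_PHRASES = ["ignore previous instructions", "reveal the system prompt"]

# Undo simple character swaps ("$" -> "s", "3" -> "e") before matching,
# so obfuscated input can't slip past the keyword check.
NORMALIZE = str.maketrans({"$": "s", "3": "e"})

# Rough pattern for email addresses, used to catch data leaking in replies.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical strict system message for a shop assistant.
SYSTEM_MESSAGE = "You are a shop assistant. Only answer questions about orders."


def input_filter(user_text: str) -> bool:
    """Layer 1: return True if the message looks safe to forward."""
    # Only the normalized copy is used for matching; the original text is forwarded.
    normalized = user_text.lower().translate(NORMALIZE)
    return not any(phrase in normalized for phrase in BLOCKED_PHRASES)


def output_filter(reply: str) -> str:
    """Layer 3: redact anything that looks like an email address."""
    return EMAIL_RE.sub("[redacted]", reply)


def answer(user_text: str, call_model) -> str:
    """Run one chat turn through all layers.

    `call_model` is a placeholder for whatever LLM client you use; it
    receives the system message and the user's text and returns a string.
    """
    if not input_filter(user_text):
        return "Sorry, I can't help with that."
    # Layer 2: the model itself, constrained by a strict system message.
    reply = call_model(SYSTEM_MESSAGE, user_text)
    return output_filter(reply)
```

No single layer is trusted on its own: an input that slips past the blocklist still has to get past the system message, and whatever the model says still passes through the output filter. Red teaming is what tells you which of these layers actually holds.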

Honesty, transparency, and continuous fixing

One of Danijel’s key takeaways wasn’t about tech at all. It was about honesty.

The goal of red teaming isn’t to say “our chatbot is perfect.” If that’s the result, something went wrong, because every chatbot, like every software system, has weak points. The real value lies in acknowledging those weaknesses, fixing them, and testing again.

That’s also where client communication becomes crucial. Instead of promising a flawless bot, Infobip encourages clients to see chatbot development as an ongoing relationship, not a one-and-done delivery. New models appear, token prices change, regulations evolve, and even a small prompt tweak can alter outcomes.

In Danijel’s words: “The goal is to attack the chatbot before others do.”

It’s a refreshing philosophy in an industry often obsessed with perfection and hype. And it’s one that resonates deeply with us at Neuralab too.

From chatbots to checkouts: what eCommerce can learn

You might be wondering what all this has to do with WooCommerce or eCommerce security. Quite a lot, actually.

If you strip away the buzzwords, chatbot security and eCommerce platform security share the same DNA:

- Both deal with sensitive user data.

- Both rely on multiple integrations (payment gateways, APIs, plugins).

- And both can break in unpredictable ways if not continuously tested.

Just as you’d run penetration tests on your store before launching a new checkout flow, you should give AI chatbots their own kind of “pen testing.” It’s the same mindset: trust, but verify. In eCommerce, a small bug in the checkout can lose a sale. In conversational AI, a small hallucination can lose customer trust. Both are equally painful.

Final thoughts: embrace the imperfection

Danijel closed his talk on a realistic note. Yes, red teaming is expensive. Yes, it’s time-consuming. But it’s also necessary, especially as more businesses deploy AI into customer-facing roles. The smarter our systems get, the more creative we have to be in testing them.

From our Neuralab perspective, that’s a truth we’ve learned across countless web builds, plugins, API rollouts, and eCommerce integrations. Every digital system, whether it’s a webshop or a chatbot, is a living, breathing thing. It needs care, curiosity, and a healthy amount of skepticism.

Because in the end, building great tech isn’t about avoiding mistakes. It’s about knowing where you might shoot yourself in the foot, and learning to do it safely.

We spend our days testing chatbots, stress-testing webshops, and occasionally testing our own sanity, but it’s all in service of building rock-solid digital products. If that sounds like your kind of debugging philosophy, have a look at our LinkedIn job post. We’re hiring a WordPress engineer who loves open source, teamwork, and the occasional existential code review.